I’ve always had a love for the art in video games. Sure, all mediums have some ability to craft worlds from nothing, but none are so realized, so there, as the worlds in video games. It’s also an example of the interplay between “pure art” and computer technology. And in the case of the GameCube/Wii, technology from 1999, even! Yes, smart engineers played a large role in building game engines and tooling, but artists are very rarely appreciated, yet they are the ones responsible for what you see, what you hear, and often how you feel during a specific section. Not only do they model and texture everything, they control where the postprocessing goes, where the focus is pulled in each shot, and tell the programmers how to tweak the lighting on the hair. A good artist is a “problem solver” in much the same way that a good engineer is. They are the ones who decide how the game looks, from shot and scene composition to materials and lighting. The careful eye of a good art director is what turns a world from a pile of models to something truly special. Their work has touched the lives of so many over the past 30 years of video game history.

noclip.website, my side project, is a celebration of the incredible work that video game artists have created. It lets you explore some video game maps with a free camera and see things from a new perspective. It’s grown to moderate popularity now, and since I’ve been developing it in one form or another for about 6 years, I figured I should say a few words about its story and some of the experiences I’ve had making it.

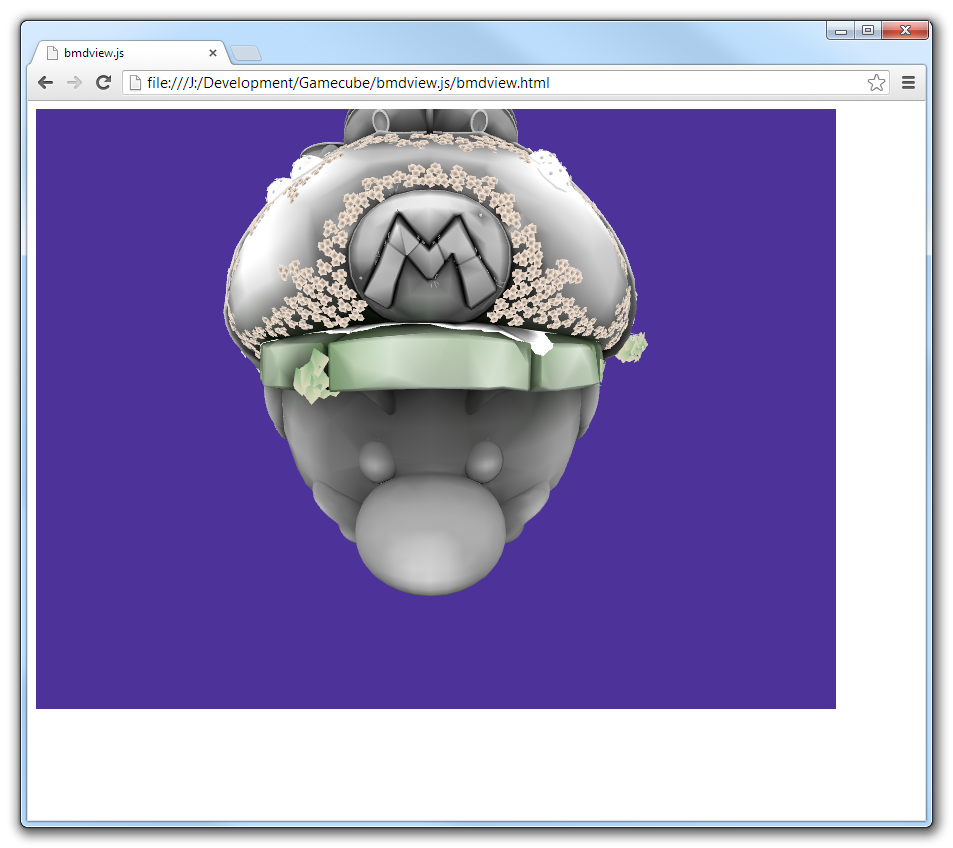

My first commit to an ancient noclip predecessor, bmdview.js, was made on April 11th, 2013. I was inspired by amnoid’s bmdview and my own experiments with the tool. I had never written any OpenGL or graphics code before, but I managed to stumble my way through with trial and error and persistence.

This is the first screenshot I can find of bmdview.js with something recognizable being drawn. It’s the Starship Mario from Super Mario Galaxy 2.


After this resounding initial success, I… put it down, apparently. I had a lot of side projects at the time: learning about GIF/JPEG compression, about the X Window System, and doing some game reverse engineering of my own. I revisited it a few times over the years, but a “complete emulation” always seemed out of reach. There were too many bugs, and I barely knew anything about the GameCube’s GPU.

A few years or so later, I was inspired enough to create an Ocarina of Time viewer, based off the work that xdaniel did for OZMAV. I can’t remember too many details; it’s still live and you can go visit it, but I did have to un-bit-rot it for this blog post (JS API breakage; sigh). I really enjoyed this, so I continued with a few more tools, until I had the idea to combine them all. First came Super Mario 64 DS in October of 2016, and then zelview.js was added 20 days later. I don’t have time to recount all of the incredible people whose work I built on or who have contributed to the project directly; the credits page should list most of them.

Keeping Momentum

Keeping a side-project going for this long requires momentum. When I started the project, I decided that I would try as hard as possible to prevent refactors. Refactoring code kills momentum. You do not want to write more code that will be changed by the refactor, so you stop all your progress while you get it done, and of course, as you change the code for this new idea you have, you encounter resistance and difficulty. You also have to change this. Or maybe you found your new idea doesn’t fit as well in all cases, so the resulting code is still as ugly as when you started. And you get discouraged and it’s not fun any more, so you decide not to work on it for a bit. And then “a bit” slowly becomes a year, and you get less guilty about not touching it ever again. It’s a story that’s happened to me before. *cough*.

In response, I optimized the project around me and my goals. First up, it’s exciting, it’s thrilling to get a game up on screen. There’s nothing like seeing those initial hints of a model come through, even if it’s all contorted. It’s a drive to keep pushing forward, to know that you’re part of the way there. Arranging the code so I can get that first hint of a game up on the screen ASAP is a psychological trick, but it’s an effective one. That rush has never gotten old, even after the 20 different games I’ve added.

Second, I’m probably the biggest user of my own site. Exploring the nooks and crannies of some nostalgic game is something I still do every day, despite having done it for 6 years. Whether it’s trying to figure out how they did a specific effect, or getting a new perspective on past nostalgia gone by, I’m always impressed with the ingenuity and inventiveness of game artists. So when I get things working, it’s an opportunity to explore and play. This is fun to work on not just because other people like it, but because I like it. So, when people ask me if I will add so-and-so game, the answer is: probably not, unless you step up to contribute. If I haven’t played it, it makes it harder for me to connect with it, and it’s less fun for me to work on.

Third, I decided that refactors were off-limits. To help with this, I wanted as few “abstractions” and “frameworks” as possible. I should share code when it makes sense, but never be forced to share code when I do not want to. For this, I took an approach inspired by the Linux kernel and built what they call “helpers”: reusable bits and bobs here and there that cut down on boilerplate and common tasks, but are used on an as-needed basis. If you need custom code, you outgrow the training wheels and move from the helpers to your own thing, perhaps by copy/pasting a helper and then customizing it. Both tef and Sandi Metz have explored this idea before: code is easier to write than it is to change. When I need to change an idea that did not work out well, I should be able to do it incrementally: port small pieces of the codebase over to the new idea, and keep the old one around. Or just delete ideas that did not work out, without large changes to the rest of the code.

As I get older, I realize that the common object-oriented languages make it difficult to share code in better ways and too easily lock you into the constraints of your own API. When this API requires a class, it takes less effort to squeeze your own code into extending a base class than it does to create a new file for an interface, put what you need in there, make your class implement the interface, and then try to share code and build the helpers. TypeScript’s structurally typed interfaces, where any class can automatically match the interface, make it easy to define new ones. And simply by not requiring a separate file for an interface, TypeScript makes them a lot easier to create, and so you end up with more of them. Small changes to how we perceive something as “light” or “heavy” make a difference for which one we reach for to solve a problem.
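As a small illustration of how “light” a structurally typed interface can be, here is a minimal sketch. The names (`Destroyable`, `TextureHolder`, `ModelCache`, `destroyAll`) are made up for this example and are not taken from noclip’s codebase.

```typescript
// Hypothetical interface, declared inline wherever it is needed.
interface Destroyable {
    destroy(): void;
}

// Neither class says `implements Destroyable`; they match by shape alone.
class TextureHolder {
    public destroyed = false;
    public destroy(): void {
        this.destroyed = true;
    }
}

class ModelCache {
    public entries: string[] = ['a', 'b'];
    public destroy(): void {
        this.entries.length = 0;
    }
}

// A small helper that accepts anything shaped like Destroyable.
function destroyAll(objects: Destroyable[]): void {
    for (let i = 0; i < objects.length; i++)
        objects[i].destroy();
}

const textures = new TextureHolder();
const models = new ModelCache();
destroyAll([textures, models]);
```

Because the match is structural, the helper can be adopted or dropped per class without touching any inheritance chain.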

I am not saying that these opinions are the absolute right way to build code. There are tradeoffs involved: the amount of copy/paste code is something I can keep in my head, but someone else trying to maintain it might be frustrated by the number of different places they might have to fix a bug. What I am saying is that this was originally intended as my side-project site, and so the choices I made were designed to keep me happy in my spare time. A team or a business? Those have different goals, and might choose different tradeoffs for their organization and structure. Still, I do think I have had tremendous success with these choices.

The Web as a Platform

My hobby is breaking the web. I try to push it to its limits, and build experiences that people thought should never have been possible. This is easier than you might think, because there’s a tremendous amount of low-hanging fruit in web applications. There’s a lot that’s been said on performance culture, premature optimization, and new frameworks that emphasize “programmer velocity” at the expense of performance. That said, my experience as a game developer does cloud my judgment a bit. We’re one of the few industries that are publicly scrutinized over performance numbers and metrics by an incessant and very often rude customer base. Anything less than 30fps and you will be lambasted for “poor optimization”. Compare that to business software, where I’m not even sure my GMail client can reach 10fps. I recently discovered that Slack was taking up 10GB of memory.

A poor performance culture is baked into the web platform itself, through not just the frameworks, but the APIs and new language features as well. Garbage collection exists, but its cost is treated as if it were free. Pauses caused by the garbage collector are the number one cause of performance issues that I’ve seen. Objects like Promises can cause a lot of GC pressure, but in most JavaScript libraries found on npm they are created regularly, sometimes multiple times per API call. The iterator protocol is an absurd notion that creates a new object on every iteration because they did not want to add a hasNext method (too Java-y?) or use Python’s StopIteration approach. Its performance impact can be even worse: I’ve measured up to a 10fps drop just because I used a for…of to iterate over 1,000 objects per frame. We need to hold platform designers accountable for decisions that affect performance, and build APIs that take the garbage collector into account. Why can’t I reuse a Promise object in an object pool? Does this interface need a special dictionary, or can I get away without it?
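To make the allocation concern concrete, here is a minimal sketch of two habits that keep per-frame garbage down: an indexed loop instead of for…of, and a scratch object that is reused rather than allocated each frame. The names (`Vec3`, `scratch`, `addInto`, `sumPositions`) are invented for this example. Engines can sometimes optimize the iterator’s result objects away, so treat this as something to verify with a profiler rather than a guaranteed win.

```typescript
interface Vec3 { x: number; y: number; z: number; }

// Allocated once at startup and reused every frame (hypothetical scratch value).
const scratch: Vec3 = { x: 0, y: 0, z: 0 };

// Writes a + b into dst instead of returning a freshly allocated vector.
function addInto(dst: Vec3, a: Vec3, b: Vec3): void {
    dst.x = a.x + b.x;
    dst.y = a.y + b.y;
    dst.z = a.z + b.z;
}

function sumPositions(positions: Vec3[], out: Vec3): void {
    out.x = 0;
    out.y = 0;
    out.z = 0;
    // Indexed loop: no iterator and no per-step { value, done } result object.
    for (let i = 0; i < positions.length; i++)
        addInto(out, out, positions[i]);
}

const positions: Vec3[] = [
    { x: 1, y: 2, z: 3 },
    { x: 4, y: 5, z: 6 },
];
sumPositions(positions, scratch);
```

The output-parameter style is less pleasant to read than returning a new object, which is exactly the tradeoff being made here: a little ergonomics in exchange for no allocations in the hot path.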

Writing performant code is not hard. Yes, it takes care and attention and creative problem solving, but aren’t those the things that inspired you to the craft of computer science in the first place? You don’t need more layers, you don’t need more dependencies, you just need to sit down, write the code, and profile it.

Oh, by the way. I recommend TypeScript. It’s one of the few things I truly love about modern web development. Understand where it’s polyfilling for you, and definitely don’t always trust the compiler output without verifying it yourself, but it has earned my trust enough over time for it to be worth the somewhat minor pains it adds to the process.

WebGL as a Platform

OpenGL is dreadful. I say this as a former full-time Linux engineer who still has a heart for open-source. It’s antiquated, full of footguns and traps, and you will fall into them. As of v4.4, OpenGL gives you enough ways to avoid all of the bad ideas, but you still need to know which ideas are bad and why. I might do a follow-up post on those eventually. OpenGL ES 3.0, the specification that WebGL2 is based on, unfortunately has very few of those fixes. It’s an API very poorly suited for underpowered mobile devices, which is where it ended up being deployed.

OpenGL optimization, if you do not understand GPUs, can be a lot like reading the tea leaves. But the long and short of it is “don’t do anything that would cause a driver stall, do your memory uploads as close together as you can, and change state as little as possible”. Checking whether a shader had any errors compiling? Well you’ve just killed any threading the driver had. glUniform? Well, that’s data that’s destined for the GPU — you probably want to use a uniform buffer object instead so you can group all the parameters into one giant upload. glEnable(GL_BLEND)? You just recompiled your whole shader on Apple iDevices.
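As a rough sketch of the “one giant upload” idea, the code below packs a handful of parameters into a single Float32Array and uploads it with one call. The layout, the names (`SCENE_PARAMS_FLOATS`, `fillSceneParams`, `uploadSceneParams`), and the trimmed-down `UniformUploader` interface are assumptions for illustration; in a browser you would pass a real WebGL2 context, which exposes `UNIFORM_BUFFER`, `bindBuffer`, and `bufferSubData`.

```typescript
// Assumed layout, std140-friendly because every member is a mat4 or vec4:
//   mat4 u_Projection -> floats  0..15
//   mat4 u_ModelView  -> floats 16..31
//   vec4 u_Color      -> floats 32..35
const SCENE_PARAMS_FLOATS = 36;

function fillSceneParams(
    dst: Float32Array,
    projection: ArrayLike<number>,
    modelView: ArrayLike<number>,
    color: ArrayLike<number>,
): void {
    dst.set(projection, 0);
    dst.set(modelView, 16);
    dst.set(color, 32);
}

// Only the slice of the WebGL2 API this sketch touches (hypothetical interface).
interface UniformUploader {
    readonly UNIFORM_BUFFER: number;
    bindBuffer(target: number, buffer: unknown): void;
    bufferSubData(target: number, dstByteOffset: number, srcData: Float32Array): void;
}

// One bind and one upload for all parameters, instead of one glUniform call each.
function uploadSceneParams(gl: UniformUploader, buffer: unknown, data: Float32Array): void {
    gl.bindBuffer(gl.UNIFORM_BUFFER, buffer);
    gl.bufferSubData(gl.UNIFORM_BUFFER, 0, data);
}
```

The shader side would declare a matching uniform block; keeping every member aligned to 16 bytes avoids most std140 padding surprises.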

And, of course, this is to say nothing of the quirks that each driver has. OpenGL on Mac has a large number of issues and bugs, and, yes, those extend to the web too. ANGLE also has its own set of bugs, which, yes, I also have a workaround for.

The hope here is for modern graphics APIs to make their way to the web. Thankfully, this is happening with WebGPU. The goal is to cut down on WebGL overhead and provide something closer to the modern APIs like Vulkan and Metal. But this requires building your application in a way that’s more suitable to modern graphics APIs: thinking about things in terms of buffers, pipelines and draw calls. The one large refactor I allowed myself in the project was porting the whole platform to use my WebGPU-esque API, which I currently run on top of WebGL 2. That refactor was exhausting: even though I designed it so that I could work on all the games at separate times, with both the legacy and modern codepaths existing live on the site for months, it took me over 5 months to port all the different games, and zelview.js had to be removed as a casualty. These things are exhausting, and I didn’t have the energy to push that port through. I eventually was able to build on my N64 experience when it came time to build Banjo-Kazooie, and made something much better the second time around.
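To give a flavor of what “thinking in buffers, pipelines and draw calls” can look like, here is a deliberately tiny sketch. It is not noclip’s actual API: `Pipeline`, `DrawCall`, and `submit` are hypothetical names, and the sort ignores real concerns such as back-to-front ordering for transparent geometry.

```typescript
// All fixed-function state lives in one immutable description (hypothetical shape).
interface Pipeline {
    id: number;
    blend: boolean;
    depthWrite: boolean;
}

// A draw call is plain data: which pipeline, which buffer, how many indices.
interface DrawCall {
    pipeline: Pipeline;
    vertexBuffer: string;
    indexCount: number;
}

// Sorts by pipeline so state changes only when the pipeline actually differs.
// Returns the number of pipeline switches that were needed.
function submit(
    drawCalls: DrawCall[],
    bindPipeline: (pipeline: Pipeline) => void,
    draw: (drawCall: DrawCall) => void,
): number {
    const sorted = drawCalls.slice().sort((a, b) => a.pipeline.id - b.pipeline.id);
    let switches = 0;
    let current: Pipeline | null = null;
    for (let i = 0; i < sorted.length; i++) {
        const drawCall = sorted[i];
        if (drawCall.pipeline !== current) {
            bindPipeline(drawCall.pipeline);
            current = drawCall.pipeline;
            switches++;
        }
        draw(drawCall);
    }
    return switches;
}
```

Because draw calls are just data collected up front, the backend is free to reorder them before touching the GPU, which is much harder when state is toggled imperatively one glEnable at a time.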

I ended up with a fairly unique renderer design I’m happy about. It’s similar to recent refactors like the one that showed up in Unreal 4.22. Perhaps in the future, I can make a post about modern game engine renderers, the different passes in play, and how they relate to the underlying platform layer, for those interested in writing their own.

The Future

My main side project a few years ago was Xplain, an interactive series about the X Window System and its role in graphics history. I’m no longer working on Xplain. Some say I have a talent for clear, explanatory writing, but it takes me a long time to craft a story, lay out all the pieces, and structure it in a way that builds intuition layer by layer. I tried to reboot it a few years ago by reframing it as no longer about X11, hoping that would get me in the mood to write again, but no. It’s attached to a legacy I’m not particularly interested in exploring or documenting any further, and the site’s style and framework are not useful. I’m still interested in explanatory writing, but it will be here, like my post on Super Mario Sunshine’s water.

noclip is not my day job, it is my side project. It is a labor of love for me. It’s a website I have enjoyed working on for the past 6 years of my life. I don’t know how much longer it’ll continue beyond that. I’m feeling a bit burned out because of the large amount of tech support, bills to pay, and general… expectations? From people? They want all the games. I get it, I really do. I am ecstatic to hear that people like my work and my tool enough to know that they want their game in there too. But at some point, I’m going to need a bit of a break. To help continue and foster research while I take it a bit easier, I decided to personally fund work on a game I’ve long wanted on the site. If this is successful, I plan to do more of it. I hope to build a community of contributors to the project. Having a UI designer or frontend developer would be nice. If you want to contribute, please join the Discord! Or, at least show your appreciation in the comments. For more interesting video game snippets, I post pretty frequently on my Twitter now. If you made it all the way to the bottom here, thank you. I hope it was at least interesting.